Introduction

Retrieval Augmented Generation (RAG) support is live! KAITO RagEngine uses LlamaIndex and FAISS; learn about it here!

Latest Release: Sept 23rd, 2025. KAITO v0.7.0.

First Release: Nov 15th, 2023. KAITO v0.1.0.

KAITO is an operator that automates AI/ML model inference and tuning workloads in a Kubernetes cluster. The target models are popular open-source large models such as falcon and phi-3.

Key Features

KAITO differs from most mainstream model deployment methodologies built on virtual machine infrastructure in the following ways:

- Container-based Model Management: Manage large model files using container images with an OpenAI-compatible server for inference calls

- Preset Configurations: Built-in configurations remove the need to adjust workload parameters for specific GPU hardware

- Multiple Runtime Support: Support for popular inference runtimes including vLLM and transformers

- Auto-provisioning: Automatically provision GPU nodes based on model requirements

- Public Registry: Host large model images in the public Microsoft Container Registry (MCR) when licenses allow

KAITO greatly simplifies the workflow of onboarding large AI inference models in Kubernetes.
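Because the inference server is OpenAI-compatible, a client can talk to it with a standard chat completion request. The following is a minimal sketch, not taken from the KAITO documentation: the service address `http://workspace-example:80` and the model name `example-model` are hypothetical placeholders, and the `/v1/chat/completions` path is assumed from the OpenAI API convention. The request is only built here, not sent.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",  # assumed OpenAI-style path
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical in-cluster service address and model name.
req = build_chat_request("http://workspace-example:80", "example-model", "What is Kubernetes?")
print(req.get_method(), req.full_url)
```

To actually send the request from inside the cluster, pass `req` to `urllib.request.urlopen` and decode the JSON response body.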

Architecture

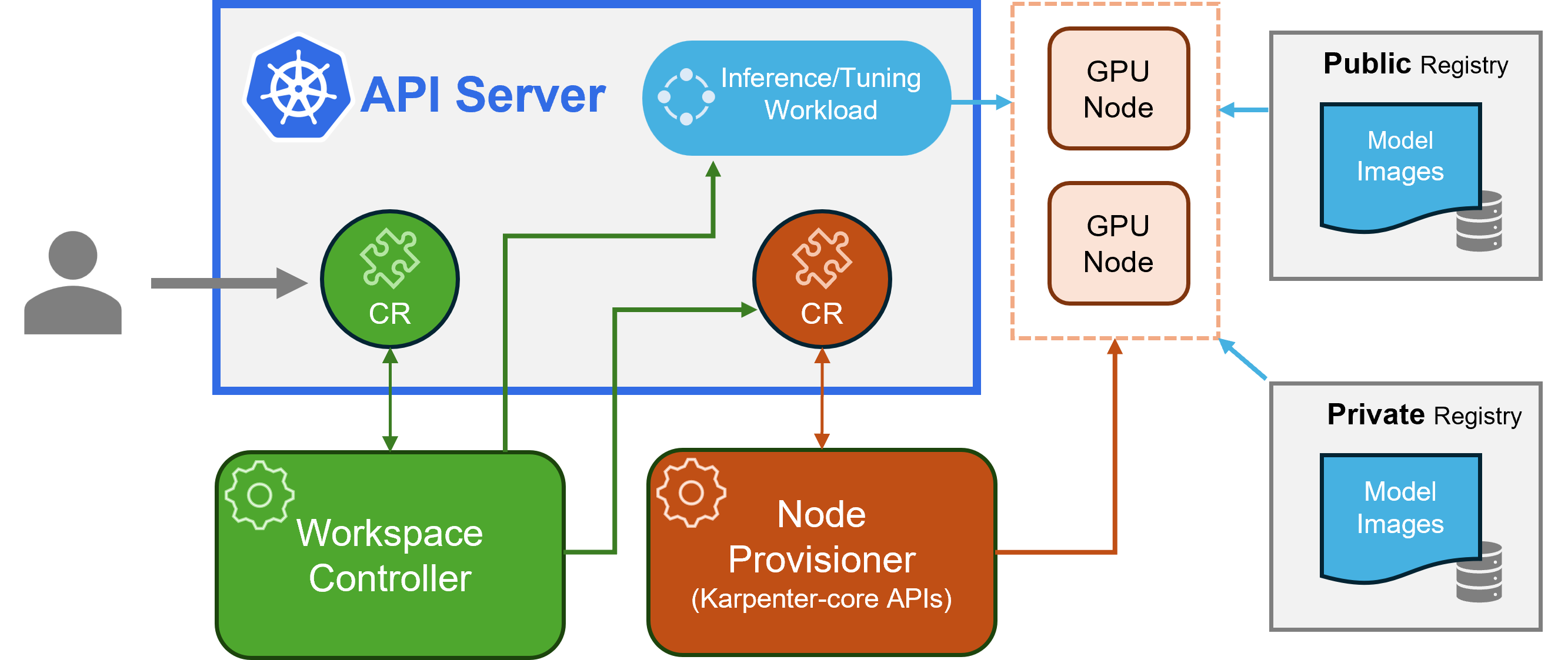

KAITO follows the classic Kubernetes Custom Resource Definition (CRD)/controller design pattern. Users manage a workspace custom resource that describes the GPU requirements and the inference or tuning specification. KAITO controllers automate the deployment by reconciling the workspace custom resource.
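To make the idea of a workspace custom resource concrete, here is a rough sketch that builds one as a Python dictionary and prints it as JSON, which `kubectl apply -f` also accepts. The `apiVersion`, the field names, the instance type, and the preset name are assumptions for illustration and should be checked against the CRD installed in your cluster.

```python
import json


def workspace_manifest(name: str, instance_type: str, preset_name: str, app_label: str) -> dict:
    """Return a workspace manifest: GPU requirements plus an inference spec."""
    return {
        "apiVersion": "kaito.sh/v1beta1",  # assumption: verify the served version
        "kind": "Workspace",
        "metadata": {"name": name},
        # GPU requirements (assumed field names).
        "resource": {
            "instanceType": instance_type,
            "labelSelector": {"matchLabels": {"apps": app_label}},
        },
        # Inference specification that refers to a built-in preset (assumed field names).
        "inference": {"preset": {"name": preset_name}},
    }


manifest = workspace_manifest(
    name="workspace-example",                  # hypothetical resource name
    instance_type="Standard_NC24ads_A100_v4",  # example Azure GPU VM size
    preset_name="phi-3.5-mini-instruct",       # assumed preset name
    app_label="phi-3-5",
)
print(json.dumps(manifest, indent=2))
```

The point of the sketch is the shape of the resource: one section for the GPU nodes the workload needs and one section for what to run on them.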

The above figure presents the KAITO architecture overview. Its major components consist of:

- Workspace controller: Reconciles the workspace custom resource, creates machine custom resources to trigger node auto provisioning, and creates the inference or tuning workload (deployment, statefulset or job) based on the model preset configurations.

- Node provisioner controller: The controller's name is gpu-provisioner in the gpu-provisioner helm chart. It uses the machine CRD, which originated from Karpenter, to interact with the workspace controller. It integrates with Azure Resource Manager REST APIs to add new GPU nodes to the AKS or AKS Arc cluster.

The gpu-provisioner is an open-source component. It can be replaced by other controllers if they support Karpenter-core APIs.
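The reconcile behavior described above can be pictured with a toy model. This is a simplified illustration of the controller pattern, not KAITO's actual code: the `Workspace` and `ClusterState` classes are hypothetical, and the mapping of tuning to a job and inference to a deployment is an assumption made only for this sketch.

```python
from dataclasses import dataclass, field


@dataclass
class Workspace:
    name: str
    gpu_nodes_wanted: int
    mode: str  # "inference" or "tuning"


@dataclass
class ClusterState:
    machines: list = field(default_factory=list)   # names of machine resources
    workloads: dict = field(default_factory=dict)  # workspace name -> workload kind


def reconcile(ws: Workspace, state: ClusterState) -> list:
    """Move the observed state toward the desired state; return the actions taken."""
    actions = []
    prefix = ws.name + "-machine-"
    owned = [m for m in state.machines if m.startswith(prefix)]
    # Create machine resources until the requested GPU node count is met.
    for i in range(len(owned), ws.gpu_nodes_wanted):
        machine = f"{prefix}{i}"
        state.machines.append(machine)
        actions.append(f"create machine {machine}")
    # Create the workload once (assumed mapping, see the note above).
    if ws.name not in state.workloads:
        kind = "job" if ws.mode == "tuning" else "deployment"
        state.workloads[ws.name] = kind
        actions.append(f"create {kind} {ws.name}")
    return actions


state = ClusterState()
ws = Workspace(name="demo", gpu_nodes_wanted=2, mode="inference")
first = reconcile(ws, state)
second = reconcile(ws, state)  # nothing left to do: reconciliation is idempotent
print(first)
print(second)
```

Running the loop a second time produces no actions, which is the property that lets a controller be re-run safely whenever the resource or the cluster changes.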

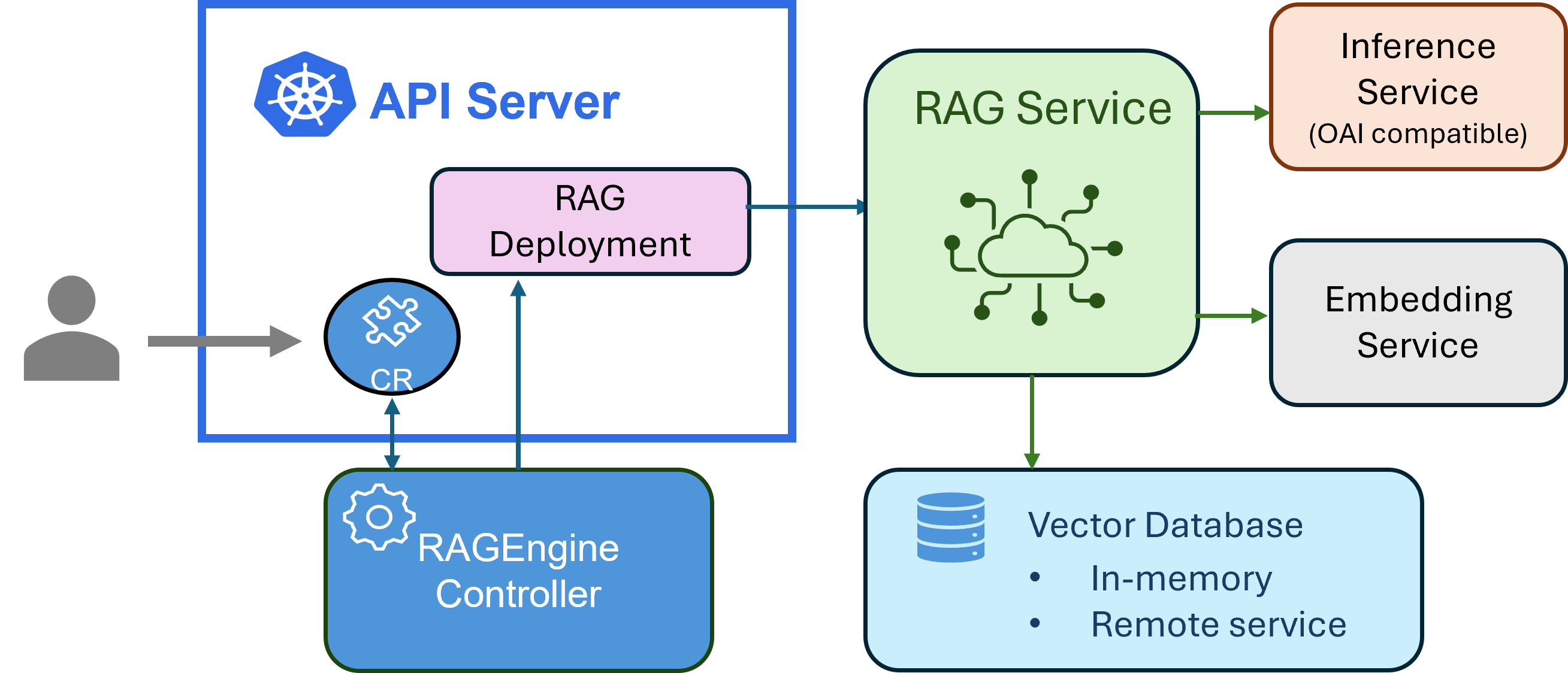

NEW! Starting with v0.5.0, KAITO includes a new operator, RAGEngine, which streamlines the management of a Retrieval Augmented Generation (RAG) service.

As illustrated in the above figure, the RAGEngine controller reconciles the ragengine custom resource and creates a RAGService deployment. The RAGService provides the following capabilities:

- Orchestration: uses the LlamaIndex orchestrator.

- Embedding: supports both local and remote embedding services to embed queries and documents for the vector database.

- Vector database: supports a built-in FAISS in-memory vector database. Remote vector database support will be added soon.

- Backend inference: supports any OpenAI-compatible inference service.

The details of the service APIs can be found in this document.
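The way these capabilities fit together can be shown with a small retrieve-then-generate sketch. It is a conceptual illustration only and does not use LlamaIndex, FAISS, or the RAGService API: the bag-of-words `embed` function stands in for a real embedding service, `InMemoryIndex` stands in for the vector database, and the final prompt is what would be sent to the backend inference service. All names here are hypothetical.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lower-cased word counts (stand-in for an embedding model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryIndex:
    """Stand-in for an in-memory vector database."""

    def __init__(self):
        self.items = []  # list of (document, embedding) pairs

    def add(self, doc: str) -> None:
        self.items.append((doc, embed(doc)))

    def search(self, query: str, k: int = 1) -> list:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [doc for doc, _ in ranked[:k]]


def build_prompt(query: str, context: list) -> str:
    """Combine the retrieved documents with the user query for the inference backend."""
    return "Context:\n" + "\n".join(context) + "\n\nQuestion: " + query


index = InMemoryIndex()
index.add("KAITO provisions GPU nodes for model inference")
index.add("Bananas are yellow fruit")

query = "which tool provisions GPU nodes"
hits = index.search(query, k=1)
prompt = build_prompt(query, hits)
print(prompt)
```

In a real deployment the prompt would then be posted to the OpenAI-compatible backend, which generates the answer grounded in the retrieved context.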

Getting Started

👉 To get started, please see the Workspace Installation Guide and the RAGEngine Installation Guide!

👉 For a quick start tutorial, check out Quick Start!

Community

- GitHub: kaito-project/kaito

- Slack: Join #kaito channel in CNCF Slack

- Email: kaito-dev@microsoft.com